This article is about Artificial Intelligence

Confusion Matrix in Machine Learning

By NIIT Editorial

Published on 13/08/2021

7 minutes

Machine learning (ML) is a branch of artificial intelligence (AI). It allows software applications to make more accurate predictions without being explicitly programmed to do so. Machine learning algorithms take historical data as input and predict output values for new data. Recommendation engines are one of the most common examples, but machine learning is also widely used in spam filtering, fraud detection, malware detection, predictive maintenance, and business process automation (BPA).

Classification is the task of assigning each item in a dataset to one of several classes. In a typical ML workflow, you frame the problem, collect and clean the data, add the necessary feature variables, train the model, measure its performance, and improve it by minimizing a cost function until it is ready to deploy.

However, the more pressing question is how to measure the model's performance, and which metric to use.

The broad answer in this scenario would be to compare predicted and actual values. But, does this trivial answer solve the issue at hand?

Let us consider the problem using a sample dataset and try to examine the issue.

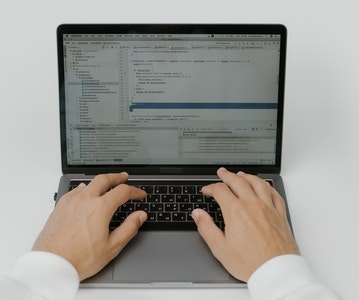

Python3

#IMPORTING LIBRARIES

import pandas as pd

# train_test_split lives in sklearn.model_selection; the scoring functions live in sklearn.metrics

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score, confusion_matrix

from sklearn.metrics import precision_score, recall_score, f1_score

#IMPORTING DATASET SAMPLE.CSV

sampledata = pd.read_csv('sampledataset.csv')

sampledata.head()

# Let’s split the data into training set and test set.

y=sampledata['hit']

x=sampledata.drop('hit', axis=1)

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.33, random_state=44)

"""

The training set has already been shuffled for us, which is beneficial because it ensures that all cross-validation folds are comparable.

"""

#SCALING THE DATASET

scaler_type=StandardScaler()

scale=scaler_type.fit(x_train)

x_train=scale.transform(x_train)

x_test=scale.transform(x_test)

#CHOOSING LOGISTIC REGRESSION MODEL FOR PREDICTION

ml_model=LogisticRegression()

ml_model.fit(x_train,y_train)

prediction=ml_model.predict(x_test)

accuracy = accuracy_score(y_test,prediction)

Confusion Matrix

A confusion matrix is a technique used for summarizing and evaluating the performance of a classification algorithm.

Classification accuracy alone can be misleading, especially when the classes contain unequal numbers of observations or when the dataset has more than two classes. Calculating a confusion matrix shows what the classification model is getting right and what kinds of errors it is making.
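To see how accuracy can mislead on unbalanced classes, here is a minimal sketch. The labels are hypothetical and are not taken from sampledataset.csv; the "classifier" simply predicts the majority class every time.

```python
from sklearn.metrics import accuracy_score, confusion_matrix

# Hypothetical, hand-made labels: 95 negatives (0) and 5 positives (1).
y_true = [0] * 95 + [1] * 5

# A "classifier" that always predicts the majority class.
y_pred = [0] * 100

acc = accuracy_score(y_true, y_pred)
cm = confusion_matrix(y_true, y_pred)

print(acc)  # 0.95
print(cm)
# [[95  0]
#  [ 5  0]]
```

The accuracy looks high at 95%, yet the second row of the matrix shows that every one of the five positive instances was missed.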

On any classification problem, a confusion matrix summarizes the prediction results. The correct and incorrect predictions are counted and broken down by class, so the matrix shows the different ways in which the classification model is confused when making predictions.

A confusion matrix shows not only how many errors the classifier makes but, more importantly, which types of errors it makes. This breakdown overcomes the limitations of relying on classification accuracy alone.

The performance of a classifier is therefore better evaluated by looking at the confusion matrix. The idea is to count the number of instances of class A that are classified as class B.

# Constructing the confusion matrix.

#using the prediction we got from the above code using LogisticRegression

confusion_matrix(y_test,prediction)

print("Confusion matrix")

print(confusion_matrix(y_test,prediction))

An actual class is represented by each row of the confusion matrix whereas the predicted class is represented by each column.
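The row and column layout can be checked on a small example. This is a sketch with hypothetical hand-made labels (not the article's dataset), showing how the four cells of a binary confusion matrix map to true negatives, false positives, false negatives, and true positives.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical labels: 4 actual positives (1) and 6 actual negatives (0).
y_actual    = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_predicted = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]

cm = confusion_matrix(y_actual, y_predicted)
print(cm)
# [[4 2]    row 0: actual 0 -> 4 predicted as 0 (TN), 2 predicted as 1 (FP)
#  [1 3]]   row 1: actual 1 -> 1 predicted as 0 (FN), 3 predicted as 1 (TP)

# For a binary problem, ravel() unpacks the cells in this order.
tn, fp, fn, tp = cm.ravel()
print(tn, fp, fn, tp)  # 4 2 1 3
```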

The confusion matrix may give you a lot of information but a concise metric may be preferred sometimes.

- Precision

precision = (TP) / (TP+FP)

TP represents the count of true positives while FP represents the count of false positives.

A trivial way to achieve perfect precision is to make a single positive prediction and ensure that it is correct: precision is then 1/1 = 100%. This would not be very useful, however, because the classifier would ignore all but one positive instance. For this reason, precision is usually considered together with recall.

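- Recall

recall = (TP) / (TP+FN)

FN represents the count of false negatives. Recall is the fraction of actual positive instances that the classifier correctly detects.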

# Finding precision and recall

precision_score(y_test,prediction)

recall_score(y_test,prediction)
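As a sanity check on the two formulas, the following sketch computes precision and recall by hand from the confusion matrix counts and compares them with scikit-learn's results. The labels are hypothetical and binary; they are not the article's dataset.

```python
import math
from sklearn.metrics import confusion_matrix, precision_score, recall_score

# Hypothetical binary labels, for illustration only.
y_actual    = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_predicted = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]

tn, fp, fn, tp = confusion_matrix(y_actual, y_predicted).ravel()

manual_precision = tp / (tp + fp)  # 3 / (3 + 2) = 0.6
manual_recall = tp / (tp + fn)     # 3 / (3 + 1) = 0.75

sk_precision = precision_score(y_actual, y_predicted)
sk_recall = recall_score(y_actual, y_predicted)

print(manual_precision, sk_precision)
print(manual_recall, sk_recall)
assert math.isclose(manual_precision, sk_precision)
assert math.isclose(manual_recall, sk_recall)
```

Note that precision_score and recall_score default to average='binary'. If the target has more than two classes, an explicit average argument such as 'macro' or None is required.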

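It is often convenient to combine precision and recall into a single metric known as the F1 score, particularly when you need a simple way to compare two classifiers. The F1 score is the harmonic mean of precision and recall: F1 = 2 × (precision × recall) / (precision + recall). Unlike the ordinary mean, the harmonic mean gives much more weight to low values, so a classifier gets a high F1 score only if both its precision and its recall are high.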

# To compute the F1 score, simply call the f1_score() function.

print("\nf1 score")

f1_score(y_test,prediction)

"""

Output:

Confusion matrix

f1 score

array([1. , 0.94117647, 0.95652174])

Note: f1_score() returns a per-class array like this one when it is called with average=None. With the default average='binary' it returns a single number.

"""

The F1 score favors classifiers that have similar precision and recall. This is not always what is needed: in some contexts precision matters more, and in others recall does.

An example makes this clearer. Suppose a classifier is trained to detect videos that are safe for kids to watch. Here, a classifier that keeps only safe videos (high precision) but rejects many good ones (low recall) would be preferred over a classifier that has much higher recall but lets a few terrible videos show up in the results. In such cases, a human review step may even be added to check the classifier's video selection.
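The way the F1 score rewards balanced precision and recall can be seen with a few numbers. This is a minimal sketch: f1_from is a hypothetical helper written for this illustration, and the labels in the last step are hand-made.

```python
import math
from sklearn.metrics import f1_score

def f1_from(precision, recall):
    """Harmonic mean of precision and recall (hypothetical helper)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Both pairs have the same arithmetic mean (0.5), but very different F1 scores.
balanced = f1_from(0.5, 0.5)
lopsided = f1_from(0.9, 0.1)
print(round(balanced, 2))  # 0.5
print(round(lopsided, 2))  # 0.18

# Cross-check against scikit-learn on hypothetical binary labels
# (precision 0.6 and recall 0.75 for these labels).
y_actual    = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_predicted = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]
sk_f1 = f1_score(y_actual, y_predicted)
assert math.isclose(sk_f1, f1_from(0.6, 0.75))
```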

In another instance, if you train a classifier to detect shoplifters on surveillance cameras, it is probably fine for the classifier to have 99% recall even if it has only 30% precision. The security guards will receive a number of false alerts, but almost all shoplifters will get caught. Unfortunately, you usually cannot have it both ways: increasing recall tends to reduce precision, and vice versa. This is known as the precision/recall tradeoff. A classifier typically computes a score for each instance and predicts the positive class when that score exceeds a threshold. Raising or lowering the threshold changes which instances are predicted positive, and therefore shifts the balance between precision and recall.
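The effect of the threshold can be sketched as follows. This example assumes a synthetic binary dataset generated with make_classification rather than the article's sampledataset.csv, and the threshold values are arbitrary choices for illustration. It uses predict_proba() to obtain a score for the positive class and applies several cutoffs by hand.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic binary data (an assumption made for this sketch).
X, y = make_classification(n_samples=1000, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33, random_state=44
)

model = LogisticRegression().fit(X_train, y_train)

# Estimated probability of the positive class for each test instance.
proba = model.predict_proba(X_test)[:, 1]

thresholds = [0.1, 0.3, 0.5, 0.7, 0.9]
precisions, recalls = [], []
for t in thresholds:
    # Predict positive only when the probability reaches the threshold.
    pred = (proba >= t).astype(int)
    precisions.append(precision_score(y_test, pred, zero_division=0))
    recalls.append(recall_score(y_test, pred))
    print(f"threshold={t:.1f}  precision={precisions[-1]:.3f}  recall={recalls[-1]:.3f}")
```

Raising the threshold can only remove positive predictions, so recall never increases as the threshold goes up. Precision usually rises with the threshold, although it is not guaranteed to do so at every step.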

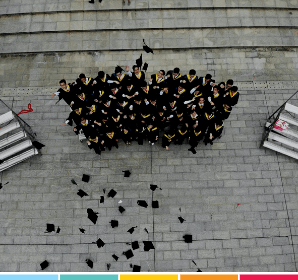

Advanced PGP in Data Science and Machine Learning (Full Time)

Become an industry-ready StackRoute Certified Data Science professional through immersive learning of Data Analysis and Visualization, ML models, Forecasting & Predicting Models, NLP, Deep Learning and more with this Job-Assured Program with a minimum CTC of ₹5LPA*.

Job Assured Program*

Practitioner Designed

Sign In

Sign In